When models are deceptively bad.

Posted on Tue 17 Sep 2024 in data-analysis

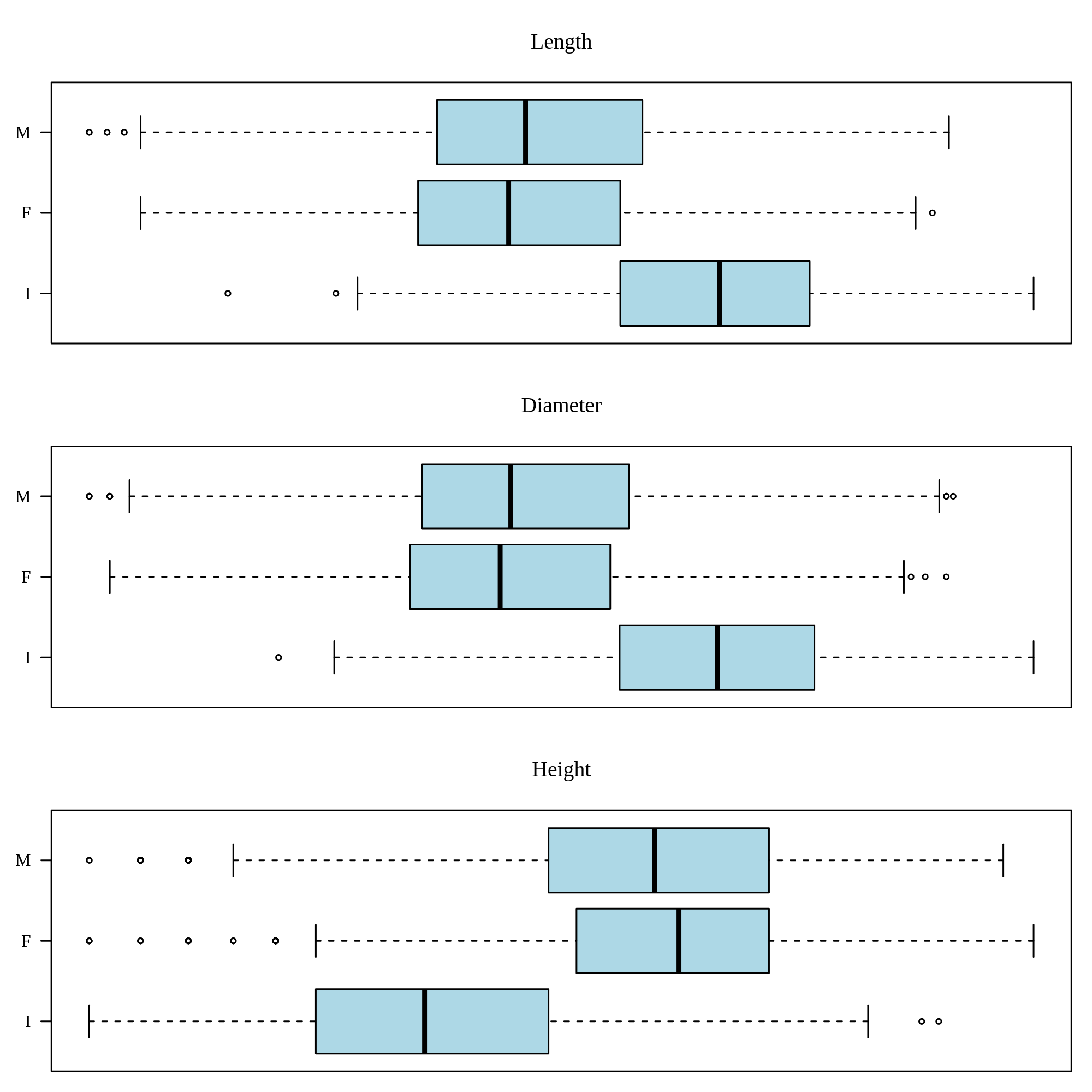

I was once tasked with fitting some machine learning models in R to a subset of the well-known abalone dataset, then recommending the best one. The aim was to use non-invasive measurements (like dimensions and weight) to classify each abalone's sex.

Ultimately, I recommended none of the models. Their overall accuracy percentages gave the impression that they performed kind of okay. But really, the models were garbage, as they misclassified nearly all of the female abalone.
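To make the accuracy trap concrete, here's a minimal Python sketch (my original analysis was in R) using made-up labels, not the actual abalone results: M is male, F is female and I is infant. The helper names `accuracy` and `per_class_recall` are just for illustration. Per-class recall asks what fraction of each true class the model got right, which is exactly where a problem like the female one shows up.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of all predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_class_recall(y_true, y_pred):
    """For each true class, the fraction of its members predicted correctly."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / n for label, n in sorted(totals.items())}

# Hypothetical labels, NOT the abalone results: M = male, F = female, I = infant.
y_true = ["M"] * 5 + ["F"] * 4 + ["I"] * 3
# A model that labels most females as male.
y_pred = ["M"] * 5 + ["M", "M", "M", "F"] + ["I", "I", "M"]

print(f"accuracy: {accuracy(y_true, y_pred):.2f}")  # accuracy: 0.67
print({k: round(v, 2) for k, v in per_class_recall(y_true, y_pred).items()})
# {'F': 0.25, 'I': 0.67, 'M': 1.0}
```

An overall accuracy of 0.67 looks passable, but a female recall of 0.25 shows the model almost never gets a female right. A confusion matrix tells the same story at a glance.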

This happened because the dimensions of male and female abalone overlapped too much, i.e. the two classes weren't separable. So, unable to tell them apart, the models just guessed "male".

Note: The 'I' category covers infant abalone whose sex has not yet been determined.
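Why would a model fall back on "male" when the classes overlap? Here's a toy sketch, assuming a single binned size measurement and made-up labels (not the real data). The hypothetical `fit_bin_majority` simply predicts the most common label in each bin, a crude stand-in for a trained classifier. When males and females share the same bins and males are slightly more common in each, the accuracy-maximising choice in every shared bin is "male", so females are never predicted at all.

```python
from collections import Counter, defaultdict

def fit_bin_majority(bins, labels):
    """Learn the most common label within each feature bin (toy classifier)."""
    by_bin = defaultdict(Counter)
    for b, label in zip(bins, labels):
        by_bin[b][label] += 1
    return {b: counts.most_common(1)[0][0] for b, counts in by_bin.items()}

# Hypothetical binned length measurements, not real abalone data.
# Males and females share bins 2 and 3, with males slightly more common in each.
bins = [1, 1, 1, 1, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3]
labels = ["I", "I", "I", "M",
          "M", "M", "F", "F", "M",
          "M", "F", "M", "F", "M"]

model = fit_bin_majority(bins, labels)
predictions = [model[b] for b in bins]

print(model)                                          # {1: 'I', 2: 'M', 3: 'M'}
print("female predicted:", predictions.count("F"))    # female predicted: 0
```

This toy model still gets 9 of 14 labels right, which is roughly the "kind of okay" accuracy described above, while having learned nothing about females.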

It really demonstrated to me that there is more to machine learning than knowing a bit of Python or R. The hard part is being able to see (and admit) that your model is rubbish.

Source code and report: GitHub